Introduction

We keep joking about LLMs not being able to count the occurrences of a letter in a word or perform maths operations. Large Language Models often fail at counting or maths because they don’t actually calculate; they predict. Numbers, operators, and results are just tokens to them, not meaningful values. Their reply might be the correct solution, or it might be wrong, but no actual calculation is performed [1].

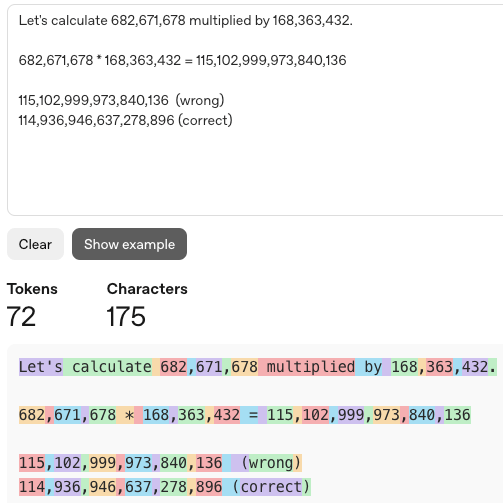

The best way to understand how LLMs really see numbers is to look at their tokens, for example using OpenAI's tokenizer. Here is an example multiplication that Mistral got wrong, alongside the correct result:

Text is above, tokens below. Colors only separate tokens; they don’t indicate any relationship between them. As you can see, for a model a large number is simply another sequence of tokens, and when it does not have an exact token sequence representing the correct result, it will reply with other tokens [2]. We humans see the number either as a sequence of individual digits (in decimal base) where each position has a place value, or as the full number itself: 114,936,946,637,278,896.
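To make the contrast concrete, here is a small sketch. `chunk_digits` is a hypothetical, simplified pre-tokenizer that cuts runs of digits into pieces of at most three digits, loosely similar to how some OpenAI tokenizers group digits before mapping them to token IDs; real tokenizers differ in the details. `place_values` is the human reading of the same number.

```python
import re


def chunk_digits(text: str) -> list[str]:
    # Hypothetical, simplified pre-tokenizer: digit runs are cut into pieces
    # of at most three digits, left to right; any other run is kept whole.
    return re.findall(r"\d{1,3}|\D+", text)


def place_values(n: int) -> list[tuple[int, int]]:
    # The human view: every digit paired with the power of ten it stands for.
    digits = str(n)
    return [(int(d), 10 ** (len(digits) - i - 1)) for i, d in enumerate(digits)]


n = 114936946637278896
print(chunk_digits(str(n)))  # ['114', '936', '946', '637', '278', '896']
print(place_values(1234))    # [(1, 1000), (2, 100), (3, 10), (4, 1)]
# Place values rebuild the number exactly; the chunks carry no such meaning.
print(sum(d * p for d, p in place_values(n)) == n)  # True
```

Note how `chunk_digits("12345")` yields `['123', '45']`: the piece `45` looks like forty-five, even though in this number it is just the last two digits of twelve thousand three hundred forty-five.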

But then, are LLMs merely encyclopedias?

You could also ask: why, then, do new models allegedly pass so many benchmarks, exams, and whatnot with increasingly high scores?

Oversimplifying significantly, yes: they are mostly a huge knowledge base with a nice user interface on top, a side effect of working by predicting tokens. But since 2023 (when it became publicly available), LLMs have had a remarkable feature that opened the door to many more capabilities: tool calling, which allows them to interact with other things apart from their vast "data". That feature is precisely what lets LLMs pass all those benchmarks without even understanding the concept of counting. Check, for example, the benchmark labels in OpenAI's GPT-5 announcement: all the best-performing entries say "<some-model-name> (python)" [3]. This is the magic trick that frees LLMs from needing to learn to count (or perform similar actions).

If you don't know how to perform mathematical operations, but you know how to generate snippets of code, execute them ("tool calling"), and read the output, you can still get correct results. It's the same concept as using a scientific calculator: I no longer remember how to calculate integrals or derivatives, but I can enter the numbers into the calculator, then read and interpret the results.
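Stripped to its essentials, a tool call is structured text that the host application, not the model, executes; the result is then fed back to the model as more text. The sketch below assumes a made-up JSON shape and a hypothetical `TOOLS` registry acting as the "calculator"; each provider defines its own, broadly similar format.

```python
import json
import operator

# Hypothetical tool registry: the "calculator" the model can ask the host to use.
TOOLS = {
    "multiply": operator.mul,
    "add": operator.add,
}


def handle_tool_call(raw_call: str) -> str:
    """Execute a tool call emitted by the model and return the result as text.

    Assumes (hypothetically) that the model emits JSON such as
    {"name": "multiply", "arguments": {"a": 2, "b": 3}}.
    """
    call = json.loads(raw_call)
    tool = TOOLS[call["name"]]
    args = call["arguments"]
    # The host does the arithmetic exactly; the model never has to.
    result = tool(args["a"], args["b"])
    # The result goes back into the conversation as text for the model to read.
    return str(result)


model_output = '{"name": "multiply", "arguments": {"a": 123456789, "b": 123456789}}'
print(handle_tool_call(model_output))  # 15241578750190521
```

The model only has to produce the right call; reading `15241578750190521` back and phrasing the answer is the part it is actually good at.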

Applying the magic trick

I feel that at times we are being sold too much misinformation and hype, but the tools already available are very powerful. I don't need GPT-5 Pro to get proper maths responses; out of the box, though, most if not all current models won't be reliable. A system message that changes the model's behaviour by guiding it to use tool calling more often unlocks that reliability.

In fact, with a simple Cursor rule or a set of VS Code GitHub Copilot instructions such as the following, you can see the magic in action:

When the USER asks a question, identify whether it is related to mathematics, statistics, or a similar topic that could be codified into a Python script. If so, generate a Python script that answers the question, execute it, and use its output to answer the question. If not, answer the question as best as possible.

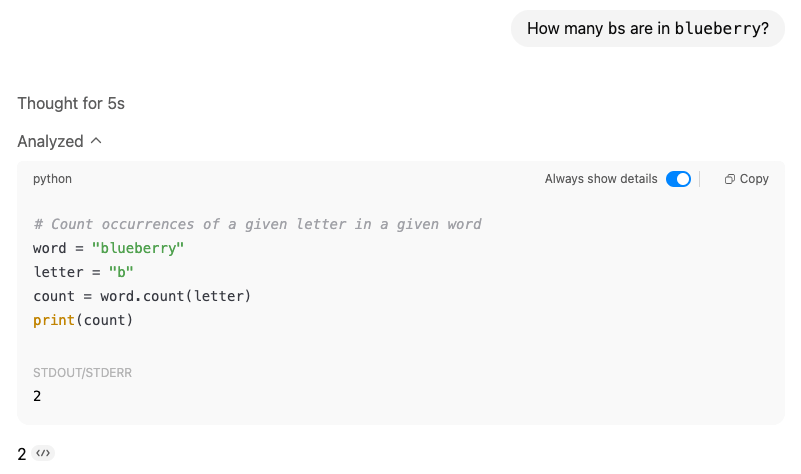

Example: If the USER asks "how many `b` letters are in the word `blueberry`?", do not directly answer the question, but generate a Python script that counts occurrences of a given letter in a given word, run it with the letter "b" and the word "blueberry", and interpret the result as the answer.

Example: If the USER asks "what is 123456789 multiplied by itself?", generate a Python script such as `print(123456789 * 123456789)`, execute it, and answer based on the result you see after executing that script.

The Python script is for your internal use. Show it to the USER and ALWAYS execute it, but do not save it as a file. Run it as an inline Python script. Always use `python3` as the interpreter.
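Under the hood, "run it as an inline Python script" amounts to something like `python3 -c "<code>"`, with the agent reading back whatever the script prints. The sketch below imitates that outside the IDE for the two examples in the rule; `run_inline` and the fallback to the current interpreter are my own assumptions, not how Cursor or Copilot implement it.

```python
import shutil
import subprocess
import sys

# The rule asks for python3; fall back to the running interpreter if python3
# is not on PATH (a portability assumption, not part of the rule).
PYTHON = shutil.which("python3") or sys.executable


def run_inline(code: str) -> str:
    """Run a Python snippet inline (python3 -c), without saving it to a file."""
    completed = subprocess.run(
        [PYTHON, "-c", code],
        capture_output=True,
        text=True,
        timeout=30,
        check=True,  # raise if the script fails instead of returning bad output
    )
    return completed.stdout.strip()


letter_script = """
def count_letter(letter, word):
    # Count occurrences of a single letter in a word.
    return word.count(letter)

print(count_letter("b", "blueberry"))
"""
square_script = "print(123456789 * 123456789)"

print(run_inline(letter_script))  # 2
print(run_inline(square_script))  # 15241578750190521
```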

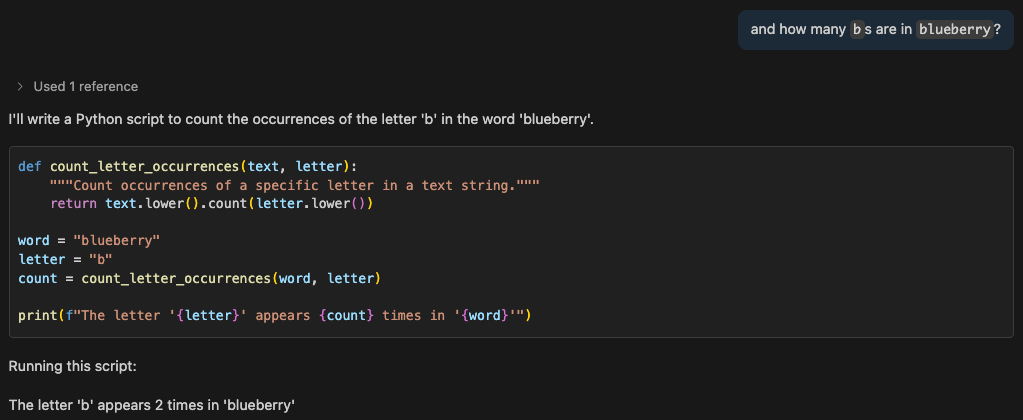

Here is my Cursor IDE (in Ask mode) now counting letters in sentences:

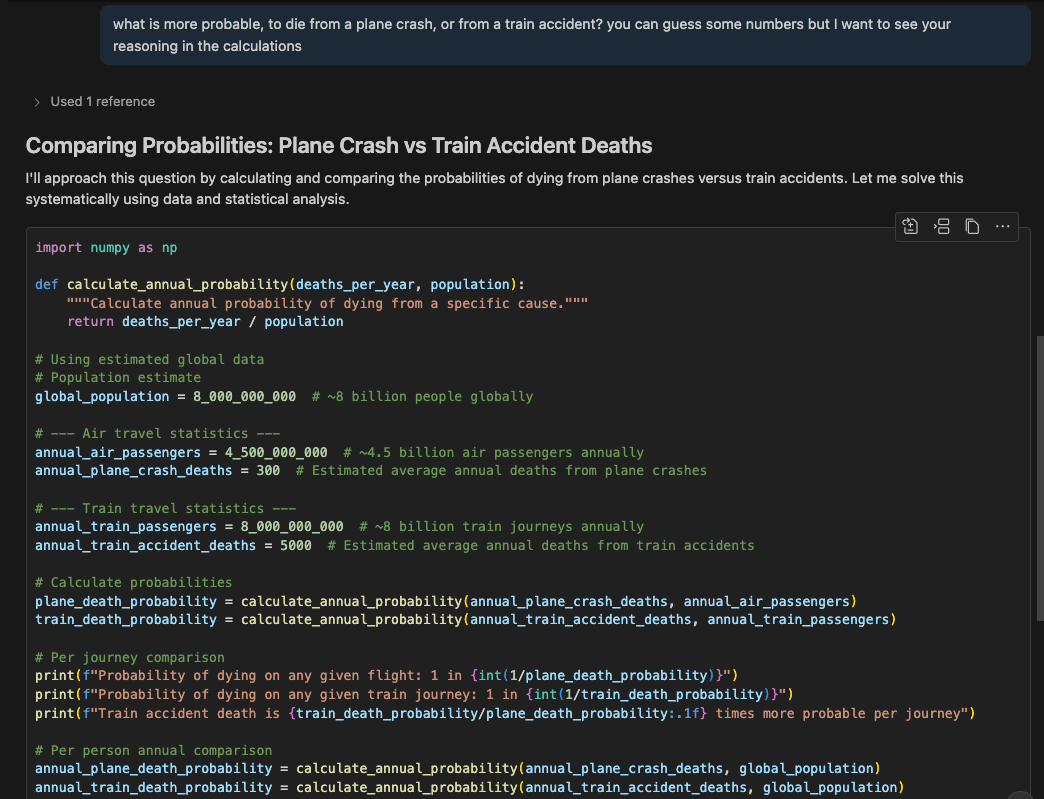

And here is GH Copilot in VS Code (in Ask mode) now even using numpy to calculate some probabilities:

I then asked it to recalculate with different values:

recalculate with some different values: 9.5 billion annual_air_passengers , 318 air deaths, 14.3 billion annual_train_passengers, 1900 train deaths

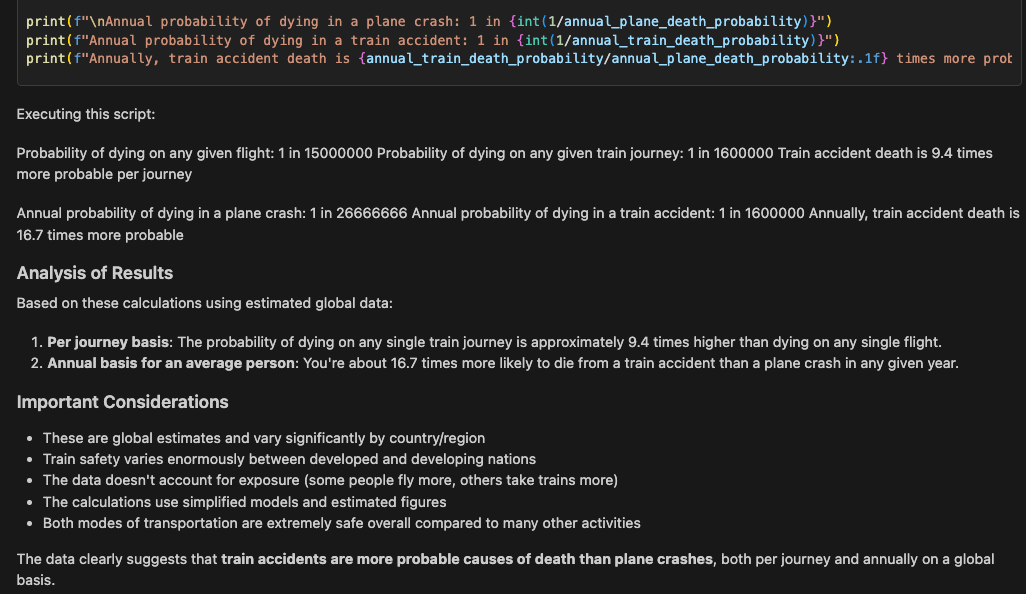

It re-ran the script with the new values (the script is omitted here for brevity) and came up with the new results and a nice comparison:

I'll recalculate the probabilities with your new values.

Let me update the script with these more precise numbers.

[script code]

Running this script:

Probability of dying on any given flight: 1 in 29,874,214

Probability of dying on any given train journey: 1 in 7,526,316

Train accident death is 4.0 times more probable per journey

Annual probability of dying in a plane crash: 1 in 25,157,233

Annual probability of dying in a train accident: 1 in 4,210,526

Annually, train accident death is 6.0 times more probable

Analysis of Updated Results

With these revised numbers, the comparison shows:

1. Per journey basis: The probability of dying on any single train journey is approximately 4.0 times higher than dying on any single flight.

2. Annual basis for an average person: You're about 6.0 times more likely to die from a train accident than a plane crash in any given year.

Key Observations

- The relative risk difference has decreased compared to the previous calculation (from 9.4× to 4.0× per journey)

- Flying remains safer than train travel on a per-journey basis

- Both transportation modes remain extremely safe overall (probabilities of 1 in millions)

- These global averages mask significant regional variations in safety standards

- The risk factors are still strongly in favor of air travel being safer than train travel.
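Since the model's script is omitted above, the following is not that script; it is a minimal sketch that reproduces the printed figures. The per-journey odds are passengers divided by deaths. The annual odds appear consistent with dividing an assumed world population of 8 billion by the deaths; that population figure is my inference from the numbers, not something the reply stated.

```python
# Inputs from the prompt above.
AIR_PASSENGERS = 9_500_000_000
AIR_DEATHS = 318
TRAIN_PASSENGERS = 14_300_000_000
TRAIN_DEATHS = 1_900
# Assumption: the reply's annual figures match a world population of 8 billion.
WORLD_POPULATION = 8_000_000_000


def one_in(population: int, deaths: int) -> int:
    # "1 in N" odds, rounded to the nearest whole number.
    return round(population / deaths)


# Ratio of per-journey death probabilities (train vs. plane), from raw values.
per_journey_ratio = (TRAIN_DEATHS / TRAIN_PASSENGERS) / (AIR_DEATHS / AIR_PASSENGERS)
# With a shared population, the annual ratio reduces to the ratio of deaths.
annual_ratio = TRAIN_DEATHS / AIR_DEATHS

print(f"Per flight: 1 in {one_in(AIR_PASSENGERS, AIR_DEATHS):,}")           # 1 in 29,874,214
print(f"Per train journey: 1 in {one_in(TRAIN_PASSENGERS, TRAIN_DEATHS):,}")  # 1 in 7,526,316
print(f"Per-journey ratio: {per_journey_ratio:.1f}x")                         # 4.0x
print(f"Annual, plane: 1 in {one_in(WORLD_POPULATION, AIR_DEATHS):,}")        # 1 in 25,157,233
print(f"Annual, train: 1 in {one_in(WORLD_POPULATION, TRAIN_DEATHS):,}")      # 1 in 4,210,526
print(f"Annual ratio: {annual_ratio:.1f}x")                                   # 6.0x
```

Dividing the whole population by the deaths treats everyone as equally exposed, which is a strong simplification; it is still how the numbers in the reply line up.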

Finally, it also worked in ChatGPT (GPT-5 model), but the results tend to vary more.

When counting letters, it gave me the most succinct responses of all my experiments:

When asked about the plane vs train mortality rates, it built a big Python script using pandas and numpy and gave more granular results, such as mortality per kilometre travelled for each mode of transport. Despite the extra complexity, it interpreted them correctly, and the numbers were accurate given its source values (which it guessed).
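The per-kilometre view normalizes by distance instead of by journey: deaths divided by passenger-kilometres. A minimal sketch, where approximating passenger-kilometres as passengers times an average trip length is my own simplification and `avg_trip_km` is a hypothetical input:

```python
def deaths_per_billion_pkm(deaths: int, passengers: int, avg_trip_km: float) -> float:
    """Deaths per billion passenger-kilometres.

    Passenger-kilometres are approximated (an assumption) as
    passengers * avg_trip_km, where avg_trip_km is a hypothetical input.
    """
    passenger_km = passengers * avg_trip_km
    return deaths * 1e9 / passenger_km
```

Because a longer average trip spreads the same number of deaths over more kilometres, a per-kilometre ranking can differ from a per-journey one even with identical death counts.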

It's already happening

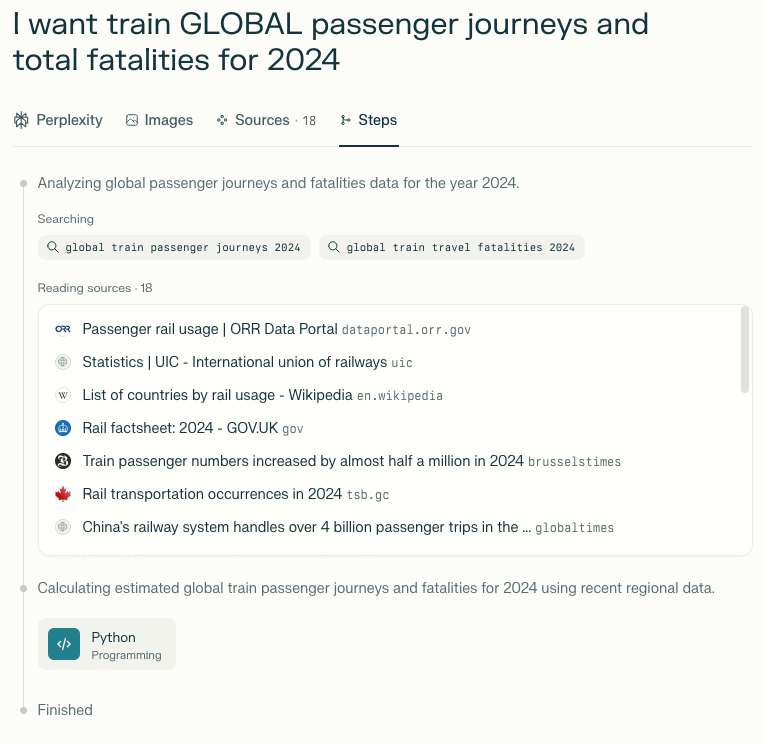

If you ask Perplexity for statistics on, let's say, global train passengers in 2024, it will run a "Python Programming" step to aggregate the data it fetches:

Similarly, Cursor recently began occasionally creating and executing small inline Python scripts, instead of relying so heavily on system tools, to run certain tests or code fragments in isolation, among similar scenarios. This was my inspiration for making any LLM use tool calling in a general way.

As of mid-2025, most LLMs also frequently perform "web searches", and MCP (Model Context Protocol) servers are a glorified API-calling mechanism for LLMs. Both are enabled via tool calling.

Conclusion

Tool calling lets LLMs combine language understanding with precise computation, giving us more reliable results without the models needing to learn arithmetic.

As an additional note, Large Language Models often pass many exam or benchmark questions by applying pattern matching to their vast memorized knowledge base. Tool calling extends this ability beyond memory and similarity, enabling them to perform computations.

Footnotes

[1] This applies to standard LLMs generating each token with a forward pass. To my limited knowledge, models that use chain-of-thought and delayed/latent reasoning can perform a certain amount of token-level symbolic reasoning.

[2] A note: LLMs can learn statistical patterns involving numbers, in the same way they learn patterns about car parts or the associations of male and female names, without inherently understanding the concepts themselves.

[3] Python is simply the language in which LLMs most easily generate code, but it is not the only option.
