2025/07 Update: It seems my idea wasn't that crazy at all. From Cursor v1.2's changelog: Agents now plan ahead with structured to-do lists, making long-horizon tasks easier to understand and track.

Background

From streamlining everyday tasks to unlocking groundbreaking innovations, AI is fundamentally reshaping what we can create and how we work. Between helpful chat assistants, coding companions like GitHub Copilot, and the versatile OpenAI and Gemini models, we have a myriad of ways to integrate AI into our workflows. Now, with agentic IDEs like Cursor, any engineer has access to a development environment that leverages AI agents to automate non-trivial tasks.

When you want to improve the developer experience, there's no closer place to be than directly inside the IDE, which also helps to reduce context switching. Cursor has powerful agentic capabilities, enabling us to experiment not only with automated tasks but even with complete workflows. At the same time, since agentic workflows and Large Language Models are still fairly new and evolving, it's a good idea to probe their limitations and experiment with ways to handle them.

Why?

Like every tool built on LLMs, Cursor works within a context window. Although the context windows of all major LLMs are growing rapidly, Cursor's performance still degrades over longer sessions: it may struggle to recall project details or how to execute tasks it previously handled with ease. Cursor's suggestion to start a new chat periodically (a recommendation that was more prominent in earlier versions) further suggests that its context window is a practical limit. This limitation is similar to how human memory works.

To combat forgetfulness, we rely on external aids: we write things down. So, how can we make Cursor requests stateful?

Experiment 1: Let's use local files

I ran an early experiment to validate my idea, using an approach based on Markdown files. I chose Markdown because LLMs understand it well and it is a very lightweight format.

I adopted a minimal Kanban workflow, mostly for ease of implementation, and organized tasks into three files: 'todo' for pending items, 'WIP' for the current task in progress (allowing only one at a time), and 'done' for completed tasks.

After a few iterations, the following Cursor Rule worked fine with a few rounds of asking the IDE to do multiple tasks based on a single prompt. It also worked when I manually edited the todo.md file and added a task or two.

use-kanban.mdc file:

---
description:
globs:
alwaysApply: true
---

# Keep track of your thoughts and actions

1. As part of your thought process and actions, you will always check the
   contents of the file `kanban/todo.md` (your pending/TODO tasks) and the file
   `kanban/wip.md` (your current task in-progress, WIP).
2. If you already have something in the `kanban/wip.md` file:
   - You will first offload any new task to the `kanban/todo.md` file, by
     appending them to the end of that file.
3. If you did not have anything in the `kanban/wip.md` file:
   - You will add the new task to `kanban/wip.md`.
4. You will then proceed with the task described in the `kanban/wip.md` file.
5. When you finish with that task, you will move it from `kanban/wip.md`
   to `kanban/done.md`, by deleting the entry in the file `kanban/wip.md` (empty the file,
   but do not delete it) and appending it to the end of the `kanban/done.md` file.
6. Afterwards, you will pick the first line in the `kanban/todo.md` file,
   if they were any, and "move" it to `kanban/wip.md` file.
7. You will then proceed to step 4. of this list (proceed with the task
   described in the `kanban/wip.md` file), until `kanban/todo.md` file is empty.
8. Then you can finish any other current action/commands.

Tasks will be added to the files one per line, starting with `-`,
and ending in a newline.

Example of an empty todo.md file:

```
```

Example of a todo.md file with two pending tasks:

```
- Task 1
- Task 2
```

The `wip.md` file should never have more than at most one task.
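To make the intended state transitions easier to follow, here is a minimal Python sketch of the same three-file workflow. It is only an illustration of what the rule asks the agent to do, not something Cursor runs: the function names (`read_tasks`, `write_tasks`, `add_task`, `finish_current`) are hypothetical, and it assumes the `kanban/` layout and the one-task-per-line `- ` format described in the rule.

```python
from __future__ import annotations

from pathlib import Path


def read_tasks(path: Path) -> list[str]:
    """Return the tasks stored in a kanban file (lines starting with '- ')."""
    if not path.exists():
        return []
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line[2:].strip() for line in lines if line.startswith("- ")]


def write_tasks(path: Path, tasks: list[str]) -> None:
    """Write one task per line; an empty list leaves an empty (not deleted) file."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("".join(f"- {task}\n" for task in tasks), encoding="utf-8")


def add_task(board: Path, task: str) -> None:
    """Steps 2-3: a new task goes to wip.md if it is free, otherwise to todo.md."""
    if read_tasks(board / "wip.md"):
        write_tasks(board / "todo.md", read_tasks(board / "todo.md") + [task])
    else:
        write_tasks(board / "wip.md", [task])


def finish_current(board: Path) -> str | None:
    """Steps 5-6: move the WIP task to done.md, then promote the first TODO.

    Returns the new WIP task, or None when nothing is left to do.
    """
    wip = read_tasks(board / "wip.md")
    if wip:
        write_tasks(board / "done.md", read_tasks(board / "done.md") + wip)
    todo = read_tasks(board / "todo.md")
    write_tasks(board / "wip.md", todo[:1])
    write_tasks(board / "todo.md", todo[1:])
    return todo[0] if todo else None
```

Calling `add_task` three times on an empty board leaves the first task in `wip.md` and the other two in `todo.md`; each `finish_current` call then advances the board by one task, which mirrors the loop in steps 4 to 7.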

The initial experiment succeeded: We've confirmed that adding state to Cursor is feasible. The next step is to improve the workflow for better usability and avoid reliance on local setups.

Experiment 2: Let's use GitHub issues

Model Context Protocol (MCP) servers function as "APIs for LLMs," enabling agents to perform diverse actions through the appropriate server. They can interact either with local content or with external services.

I performed this second experiment using GitHub's official MCP server, with an Access Token generated from my GH account, and with a small repository generated for the experiment. GitHub Issues represent a simple, readily available, and realistic way to store and manage state information for this experiment.

Note that there are no specific Cursor setup instructions in the GitHub MCP repository, but following the “Claude Desktop” ones does the trick.

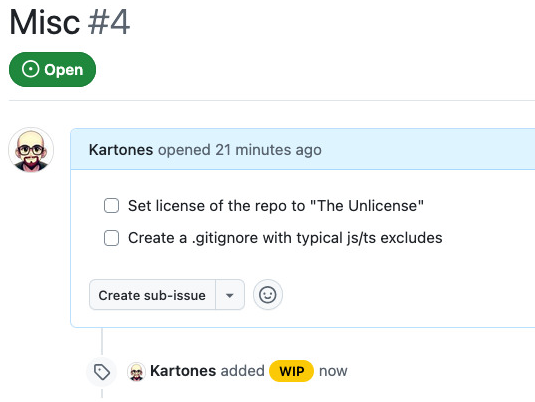

I created an empty repository with a few GitHub issues, each a small list of small features and/or fixes. The issues are now resolved and closed, but you can still access them in the experimentation repository for reference if you're interested.

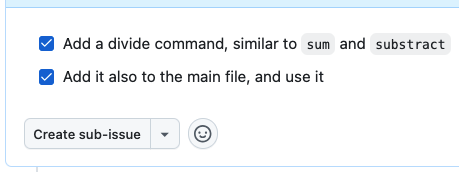

The issue format consisted of a straightforward listing of subtasks, each in the form of a checkbox + description (standard in GitHub to denote sub-tasks). This design was implemented with the intention of prompting the agent to follow detailed steps. For example:

I also created a GitHub wip label to work around the fact that GitHub issues only have two states (open and closed). I hoped that the agent would be able to use it via the official MCP server.

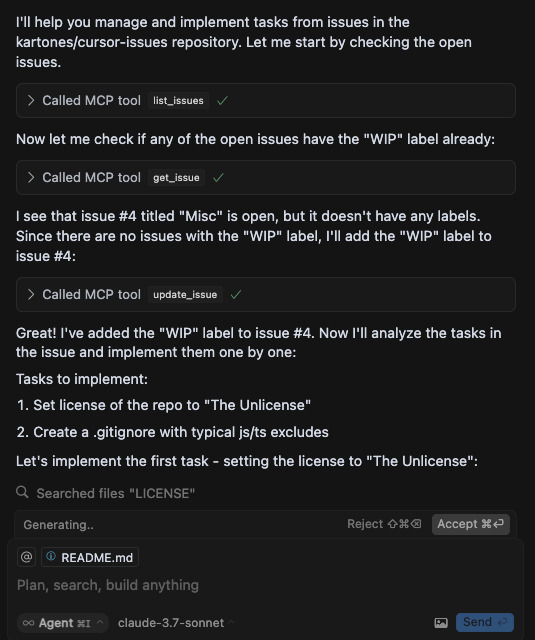

The true test arrived when I wrote a prompt and allowed the agent to execute it. It somewhat worked, but required some tweaks. Following some adjustments, such as guiding it to correctly modify issue content while maintaining the format, this became my final prompt:

use-kanban.mdc revised file:

- Check the open issues of the Github repository `kartones/cursor-issues`.
- If there is none open with the "WIP" label, then a) Get the oldest one and
  b) Add the "WIP" label to it.
- Analyze the description, and implement each task mentioned on it.
- After implementing each task of the issue, edit the issue in Github to mark
  that task as completed. To mark it as complete, check it with an `x` inside the
  `[ ]` of the task. When editing the body of the task,
  if using the `gh` tool, use the following syntax:
  `echo -e "<issue-content-with-multilines>" | gh issue edit <issue-number> --body-file -`.
- When all the tasks of the issue are completed:
  a) commit the changes
  b) push the newly created commit
  c) remove the "WIP" label from the issue. If you can't remove it via the MCP
  tool `update_issue` call, then do it via the `gh` tool.
  d) close the issue
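The "check it with an `x`" step is where the agent needed the most guidance, so here is a small Python sketch of the edit it is expected to make on the issue body. This is an illustration under assumptions, not what Cursor or the MCP server executes: the helper names `mark_task_done` and `all_tasks_done` are hypothetical, and the sketch assumes each subtask is a single `- [ ] description` line.

```python
import re


def mark_task_done(body: str, task: str) -> str:
    """Check the first unchecked '- [ ] <task>' line, leaving everything else intact."""
    pattern = re.compile(
        r"^([ \t]*[-*] )\[ \]( " + re.escape(task) + r"[ \t]*)$",
        re.MULTILINE,
    )
    return pattern.sub(r"\1[x]\2", body, count=1)


def all_tasks_done(body: str) -> bool:
    """Return True when no unchecked '- [ ]' items remain in the issue body."""
    return re.search(r"^[ \t]*[-*] \[ \] ", body, re.MULTILINE) is None
```

Editing only the matching line keeps the rest of the body untouched, which is the "maintain the format" behaviour the prompt asks for; `all_tasks_done` corresponds to the condition that triggers the commit, push, and close steps.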

Another side note: the GitHub MCP server does not seem to be able to remove labels. To work around this, I installed the official GitHub CLI tool, `gh`, which supports all the operations needed here, and let Cursor call it.
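For reference, the two `gh` invocations the prompt relies on can be sketched in Python as follows. The helper names are hypothetical and the agent itself simply runs the commands in a terminal; the sketch assumes `gh` is installed and authenticated, and it only uses the `gh issue edit` flags `--remove-label` and `--body-file -` (read the body from standard input).

```python
from __future__ import annotations

import subprocess


def remove_label_command(issue_number: int, label: str) -> list[str]:
    """Build the gh command that removes a label from an issue."""
    return ["gh", "issue", "edit", str(issue_number), "--remove-label", label]


def edit_body_command(issue_number: int) -> list[str]:
    """Build the gh command that replaces an issue body with text read from stdin."""
    return ["gh", "issue", "edit", str(issue_number), "--body-file", "-"]


def update_issue_body(issue_number: int, body: str) -> None:
    """Run the edit command, piping the new body through stdin (needs gh set up)."""
    subprocess.run(edit_body_command(issue_number), input=body, text=True, check=True)
```

Passing the arguments as a list avoids shell quoting problems with multi-line issue bodies, which is the same problem the `echo -e ... | gh issue edit` syntax in the prompt works around.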

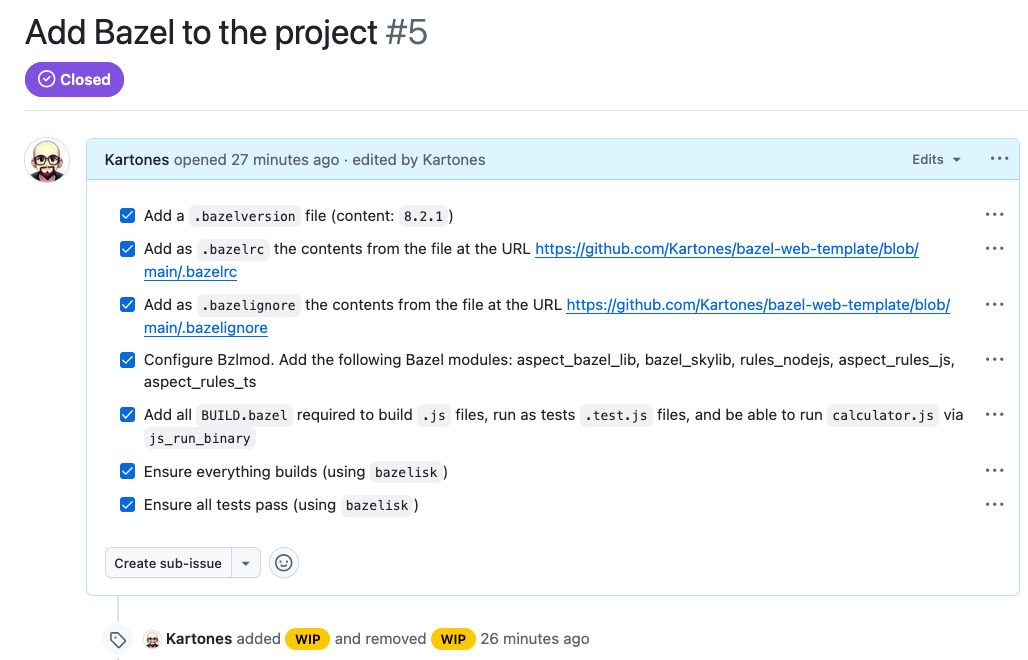

I ran it with a few more issues, for example:

The most relevant aspect of the output was the following:

To fully automate the process, my prompt includes committing and pushing changes with clear and concise commits, using the conventional commits specification. I've created this simple Cursor rule to ensure that this format is always followed.
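As a rough illustration of what that format looks like in practice, the following Python sketch checks whether the first line of a commit message has the `type(scope)!: description` shape from the Conventional Commits specification. It is not the Cursor rule itself; the names `CONVENTIONAL_HEADER` and `is_conventional` are hypothetical, and the pattern is deliberately loose (any lowercase word is accepted as a type).

```python
import re

# type, optional (scope), optional "!" for breaking changes, then ": description"
CONVENTIONAL_HEADER = re.compile(
    r"^(?P<type>[a-z]+)"
    r"(\((?P<scope>[^()\r\n]+)\))?"
    r"(?P<breaking>!)?"
    r": (?P<description>\S.*)$"
)


def is_conventional(message: str) -> bool:
    """Return True if the first line of the message looks like a conventional commit."""
    header = message.splitlines()[0] if message else ""
    return CONVENTIONAL_HEADER.match(header) is not None
```

A check like this could run in a commit hook as a safety net, so that a commit the agent writes in the wrong format is caught before it is pushed.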

The agent's ability to access documentation and files allows for the copying or adaptation of existing content based on user instructions. Here is a more complex issue:

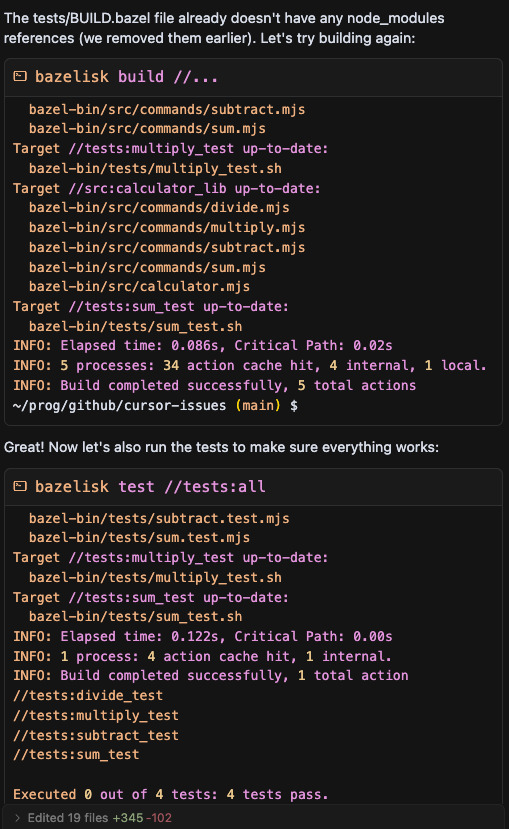

It worked and actually produced a working bazelisk build and bazelisk run scenario. While Cursor initially struggled with the Bazel repository setup for web rules, I believe those difficulties can be resolved by providing better documentation.

One last example follows: Cursor re-running the tests via Bazel after I told it to remove the node_modules folder from the source repository. Because the repository had no .gitignore file, it committed everything at first, and I had to instruct it to create the file and remove the now-ignored content:

Cursor sometimes runs the tests based on its own "judgement", but it can also be configured to automatically re-run tests after code changes using a custom rule, like this one. Furthermore, by combining this rule with a user-defined rule such as "always create tests first", the agent can effectively support a Test-Driven Development (TDD) workflow.

Conclusion

Both experiments suggest that Cursor is more than capable of supporting Kanban or comparable workflows. Crucially, its ability to retain state allows for the continuation of tasks, creating some resilience against context limitations.

Because every task ends up in a closed issue with its checklist and the associated commits, the setup also leaves a usable log of the work done.

If you are interested in further enhancing this feature, you could explore how to modify the commits rule to automatically include the corresponding GitHub ticket ID within each commit message 🤓
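One possible starting point, sketched in Python: append a footer that references the issue to the commit message. The function name `with_issue_reference` is hypothetical, and the `Refs: #<number>` footer is just one convention among several; the rule could equally ask the agent to write the footer itself.

```python
def with_issue_reference(message: str, issue_number: int) -> str:
    """Append a 'Refs: #<n>' footer unless the message already contains it."""
    reference = f"Refs: #{issue_number}"
    if reference in message.splitlines():
        return message
    return f"{message.rstrip()}\n\n{reference}\n"
```

Keeping the reference in a footer leaves the conventional commit header untouched, and GitHub turns the `#<number>` into a link to the issue.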