When you build software, you often think about edge cases, limits and race conditions, but mostly at the lower bounds: division by zero, no input, wrong input that yields no valid data...

But there are upper bounds too, and they usually create nasty scenarios: out-of-memory errors, no disk space left, the operating system killing a process because it thinks it has hung while eating all the CPU, buffer overflows...

You should set a hard limit for most things, even if it's an insane one, so you are covered in those situations. But a hard limit doesn't mean a reasonable limit.

For example, at CartoDB we face data imports of CSV and Excel files that sometimes weigh hundreds of megabytes. There are always quota limits, so we won't process a file bigger than your available disk quota, but have you stopped to think about what 500MB actually represents?

I have a real-world case of an apparently harmless 7MB XLSX file that contains ~200K rows, each with 4 columns. I have also seen CSVs that stored 2-3 million rows in less than 500MB.

Any of these cases requires a lot of processing just to open the file. Even native applications like OpenOffice struggle for minutes at 100% CPU. So imagine a system that opens the file, reads the whole contents, normalizes and sanitizes those contents, inserts the data into a DB, and then performs georeferencing and other calculations.

The sad reality is that, in general, most huge imports will fail because they reach our hard limits, but usually only after wasting minutes of processing and of the user's time spent waiting for the import to finish. We can always explain to users who complain that they should split those huge files into smaller ones, import them individually and then merge the data into a single table (once inside PostgreSQL, there's no problem having millions of rows in the same place). But it would be better to detect unreasonable scenarios up front, don't you think?

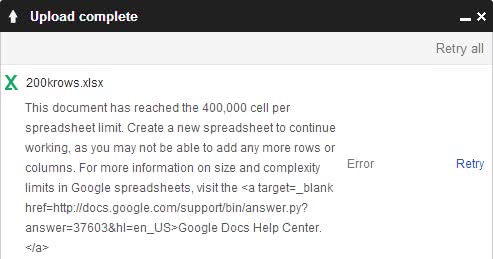

Also, it is human nature to compare, so after a colleague told me to look at how Google Drive handles huge Excel conversions, I got another "signal" that this was the way to go. This is what happens when you try to upload huge XLS/XLSX files to GDrive and wait between 12 and 15 seconds:

It seems Google guesses the size and, if it is over the limit, returns an error. Activating the new Google Sheets beta didn't help; the same file kept failing.

But what is funnier is that Google really seems to guess instead of checking the actual contents: I opened the file, trimmed it down to ~398K cells (fewer than 200K rows with only two columns) and it kept complaining about the 400K cells limit.

So, the mighty Google fails to import those files, and it fails quite fast: 12-15 seconds.

Well, I'm curious, so I decided to disassemble an XLSX, read about its format and see if I could do a fast scan to estimate the size myself, like Google apparently does (either that, or they use powerful machines and kill the conversion if it takes more than X seconds).

I was lucky because in the worksheets, inside each sheet's XML file, there is a handy tag around the first 512 bytes that says something like this:

<dimension ref="A1:D202464"/>

Which means that this worksheet will contain roughly 4 columns and 202464 rows, a total of 809856 cells.
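To make that arithmetic concrete, here is a minimal sketch of how a dimension ref could be turned into a cell estimate. The helper names (`column_index`, `parse_cell`, `estimate_dimension`) are made up for this example and are not taken from xlsx-inspector; the sketch also assumes the ref is a plain range like the one above.

```ruby
# Convert column letters to a 1-based index: "A" => 1, "Z" => 26, "AA" => 27.
def column_index(letters)
  letters.upcase.each_char.reduce(0) { |acc, ch| acc * 26 + (ch.ord - 'A'.ord + 1) }
end

# Split a cell reference such as "D202464" into [column_index, row_number].
def parse_cell(cell)
  match = /\A([A-Za-z]+)(\d+)\z/.match(cell)
  raise ArgumentError, "unexpected cell reference: #{cell.inspect}" unless match

  [column_index(match[1]), match[2].to_i]
end

# Estimate columns, rows and cells from a ref such as "A1:D202464".
# A single-cell ref such as "A1" is treated as a 1x1 range.
def estimate_dimension(ref)
  first, last = ref.split(':', 2)
  last ||= first
  first_col, first_row = parse_cell(first)
  last_col, last_row = parse_cell(last)
  columns = last_col - first_col + 1
  rows = last_row - first_row + 1
  { columns: columns, rows: rows, cells: columns * rows }
end

estimate = estimate_dimension('A1:D202464')
puts estimate[:cells] # prints 809856
```

Keep in mind this is only an upper-bound style estimate: the ref describes the bounding rectangle of the sheet, not how many of those cells actually hold data.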

I just needed some Ruby code to:

- Open the XLSX (which is a ZIP file)

- Extract the first worksheet to a temporary folder

- Read the first 512 bytes

- Extract that dimension

- Calculate the total cells of the sheet

That was by far the simplest part. The code is still missing error handling, documentation and tests to make sure it always works as intended, but you can check my xlsx-inspector class on my GitHub.
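For readers who just want the gist, the steps listed above can be sketched roughly like this. It is not the xlsx-inspector code: the method names are invented for the example, the sheet path `xl/worksheets/sheet1.xml` is an assumption (workbooks may name their first sheet differently), and it reads the first bytes straight from the archive instead of extracting to a temporary folder.

```ruby
require 'stringio'

# The dimension tag is expected within the first 512 bytes of the sheet XML.
HEAD_BYTES = 512

# Return the dimension ref (e.g. "A1:D202464") found in the first bytes of an
# IO-like object, or nil if the tag is not there.
def dimension_ref(io, limit = HEAD_BYTES)
  head = io.read(limit).to_s
  match = /<dimension\s+ref="([^"]+)"/.match(head)
  match && match[1]
end

# Read the ref from an XLSX file using rubyzip.
# The default sheet path is an assumption, not something every file guarantees.
def xlsx_dimension_ref(path, sheet = 'xl/worksheets/sheet1.xml')
  require 'zip' # rubyzip, loaded lazily so dimension_ref works without the gem
  ref = nil
  Zip::File.open(path) do |zip|
    entry = zip.find_entry(sheet)
    entry&.get_input_stream { |io| ref = dimension_ref(io) }
  end
  ref
end

# Demo on an in-memory snippet instead of a real file.
sample = StringIO.new('<worksheet><dimension ref="A1:D202464"/><sheetData>')
puts dimension_ref(sample) # prints A1:D202464
```

With a real file, `xlsx_dimension_ref('big.xlsx')` would return the ref string, or `nil` when the sheet or the tag is missing, in which case a slower full scan is the only fallback.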

Best of all, it takes just 2 seconds on my laptop to inspect the same file that takes Google at least 12 seconds, and it returns a more accurate estimate.

I plan to build a gem and publish it so it is easier for us to include it in CartoDB while I keep it updated, but meanwhile you can just download the code. It only needs a single gem (rubyzip).

Doing this "detection" logic in pure C/C++ would probably take a fraction of a second, but as our main stack is Ruby and JavaScript I'll leave it as it is.

So, if you are not going to be able to process something, if you can't win, fail fast and save resources.
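In code, failing fast can be as small as a guard that runs before any expensive work starts. The sketch below is illustrative only: `MAX_CELLS`, `ImportTooLargeError` and `ensure_importable!` are hypothetical names, and the limit value is made up, not CartoDB's real threshold.

```ruby
# Hypothetical limit chosen for the example.
MAX_CELLS = 500_000

class ImportTooLargeError < StandardError; end

# Return the estimate if it is within the limit; otherwise raise before
# any parsing, normalizing or database work has been done.
def ensure_importable!(estimated_cells, limit: MAX_CELLS)
  return estimated_cells if estimated_cells <= limit

  raise ImportTooLargeError,
        "estimated #{estimated_cells} cells exceeds the limit of #{limit}; " \
        'split the file and import the parts separately'
end

begin
  ensure_importable!(809_856)
rescue ImportTooLargeError => e
  warn "Import rejected: #{e.message}"
end
```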

Bonus track:

To do a dirty but fast CSV estimation, use the Linux command "wc -l yourfile.csv" to get the number of rows. If you want to multiply by the number of columns, you have to detect the field delimiter first and then count the columns.
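The same dirty estimation can be sketched in Ruby. This is a naive approach under clear assumptions: the delimiter is one of a short candidate list, the first line is representative, and no quoted field contains a delimiter or a line break. The names `CANDIDATE_DELIMITERS`, `guess_delimiter` and `estimate_csv_cells` are invented for the example.

```ruby
require 'tempfile'

# Delimiters considered by the guess; an assumption, extend as needed.
CANDIDATE_DELIMITERS = [',', ';', "\t", '|'].freeze

# Pick the candidate that appears most often in the first line.
def guess_delimiter(header_line)
  CANDIDATE_DELIMITERS.max_by { |delimiter| header_line.count(delimiter) }
end

# Count lines by streaming the file (like "wc -l") and multiply by the
# number of columns found in the first line.
def estimate_csv_cells(path)
  header = File.open(path, &:gets).to_s.chomp
  columns = header.split(guess_delimiter(header), -1).size
  rows = File.foreach(path).count
  { rows: rows, columns: columns, cells: rows * columns }
end

# Demo with a tiny temporary file.
Tempfile.create(['sample', '.csv']) do |file|
  file.write("id;name;lat;lon\n1;a;40.4;-3.7\n2;b;41.4;2.2\n")
  file.flush
  puts estimate_csv_cells(file.path)[:cells] # prints 12
end
```

The row count includes the header line, so treat the result as a rough ceiling rather than an exact figure.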